NVIDIA H100 Tensor Core GPUs: Revolutionizing AI Performance for Large Language Models

NVIDIA H100 GPUs set new records on all eight MLPerf Training v3.0 benchmarks, including a new test for the large language models behind generative AI.

Introduction

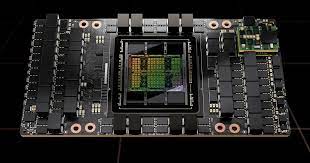

NVIDIA’s H100 Tensor Core GPUs have drawn wide attention in the AI industry for their performance, especially on the Large Language Models (LLMs) that power generative AI. In the MLPerf Training v3.0 benchmarks, the H100 GPU set new records in all eight tests, including a new test for generative AI. This article covers the H100 GPU’s benchmark performance, its scalability in large server environments, and the expanding NVIDIA AI ecosystem.

Unparalleled Performance on MLPerf Training Benchmarks:

The H100 GPU set records on every benchmark in MLPerf Training v3.0. The suite covers large language models, recommenders, computer vision, medical imaging, and speech recognition, and the H100 GPU led in each category. NVIDIA was also the only company to run all eight tests, which highlights the versatility of the NVIDIA AI platform.

Scalable Performance for AI Training:

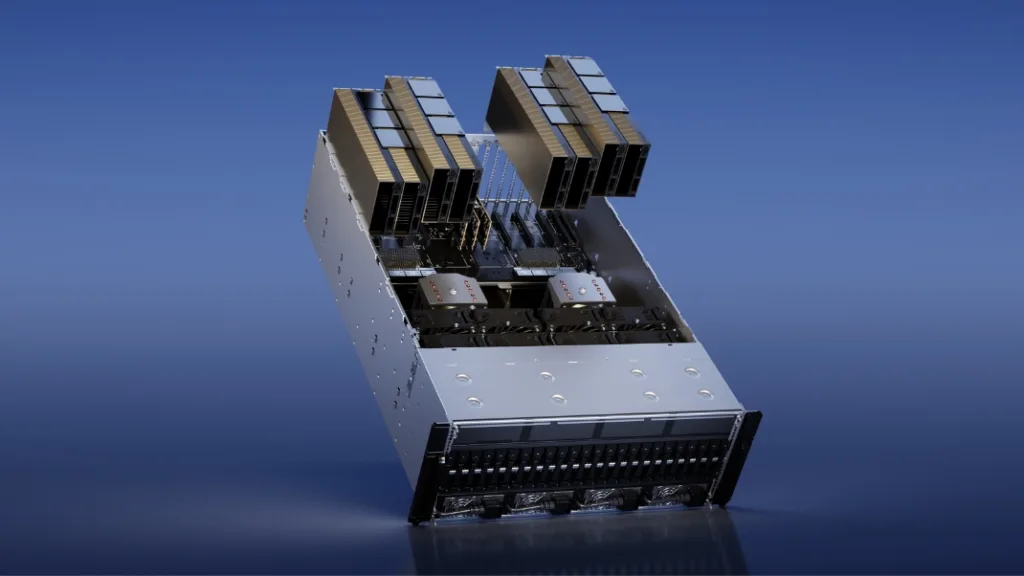

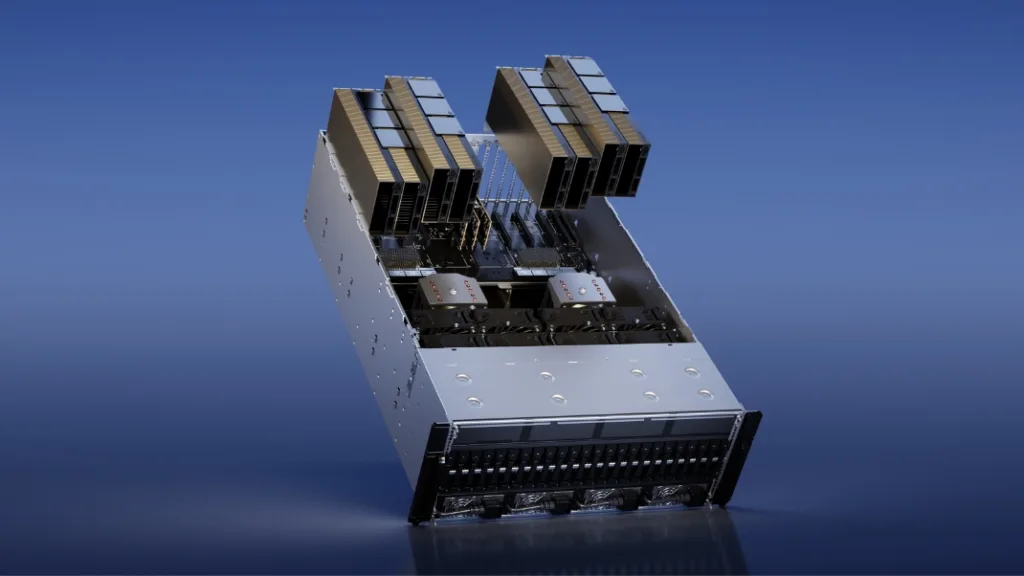

Training AI models typically involves many GPUs working together. The H100 GPUs set new performance records in at-scale AI training on every MLPerf test. With optimizations across the entire technology stack, they achieved near-linear performance scaling as the number of GPUs grew from hundreds to thousands: adding more GPUs cut training time almost in proportion. CoreWeave, a cloud service provider, demonstrated this scalability on a cluster of 3,584 H100 GPUs, which completed the massive GPT-3-based training benchmark in less than eleven minutes.
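To make "near-linear scaling" concrete, here is a minimal Python sketch of how scaling efficiency is commonly computed: the achieved speedup divided by the ideal speedup you would get if training time fell in exact proportion to the GPU count. The function name `scaling_efficiency` and the timings below are assumptions chosen for illustration; they are not MLPerf results.

```python
def scaling_efficiency(base_gpus, base_minutes, scaled_gpus, scaled_minutes):
    """Return achieved speedup divided by ideal (perfectly linear) speedup.

    A value of 1.0 means perfectly linear scaling; values close to 1.0
    are what "near-linear" refers to.
    """
    ideal_speedup = scaled_gpus / base_gpus          # e.g. 4x the GPUs -> 4x faster
    achieved_speedup = base_minutes / scaled_minutes  # how much faster it really ran
    return achieved_speedup / ideal_speedup


# Hypothetical timings for illustration only; NOT measured benchmark results.
efficiency = scaling_efficiency(
    base_gpus=512, base_minutes=64.0,
    scaled_gpus=2048, scaled_minutes=18.0,
)
print(f"Scaling efficiency: {efficiency:.2f}")
```

In this made-up case, four times as many GPUs deliver roughly 3.6 times the speed, so the efficiency is a little under 0.9. The closer the figure stays to 1.0 as a cluster grows, the less of the added hardware is lost to communication and synchronization overhead.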

Efficiency and Low-Latency Networking:

One of the distinguishing factors of the H100 GPUs is their efficiency at scale. CoreWeave’s cloud-based results matched those of an AI supercomputer running in a local data center, thanks to the low-latency networking provided by NVIDIA Quantum-2 InfiniBand. The result shows that H100 GPUs can deliver comparable performance in both cloud and on-premises server environments.

A Growing NVIDIA AI Ecosystem:

Nearly a dozen companies submitted MLPerf results on the NVIDIA platform, reflecting what NVIDIA describes as the industry’s broadest ecosystem in machine learning. Major system makers such as ASUS, Dell Technologies, GIGABYTE, Lenovo, and QCT submitted results on H100 GPUs, giving users a wide range of options for AI performance in both cloud and data center settings. This breadth of participation gives users confidence that they can get strong performance and scalability across their AI workloads.

Energy Efficiency for a Sustainable Future:

As AI performance requirements continue to increase, energy efficiency becomes crucial. NVIDIA’s accelerated computing approach lets data centers reach a given level of performance with fewer server nodes, which reduces rack space and energy consumption. Accelerated networking further improves efficiency and performance, while ongoing software optimizations unlock additional gains on the same hardware. Energy-efficient AI performance benefits the environment, and it also shortens time-to-market and lets organizations develop more advanced applications.

Conclusion:

NVIDIA’s H100 Tensor Core GPUs have raised the bar for AI performance, especially for large language models and generative AI. Their records across the MLPerf training benchmarks, combined with their scalability, efficiency, and integration within the expanding NVIDIA AI ecosystem, make them a leading choice for AI workloads. With continued software optimizations, the H100 GPUs help organizations get more out of AI and drive innovation across industries.
